Nvidia Stock May Fall as DeepSeek’s ‘Amazing’ AI Model Disrupts OpenAI

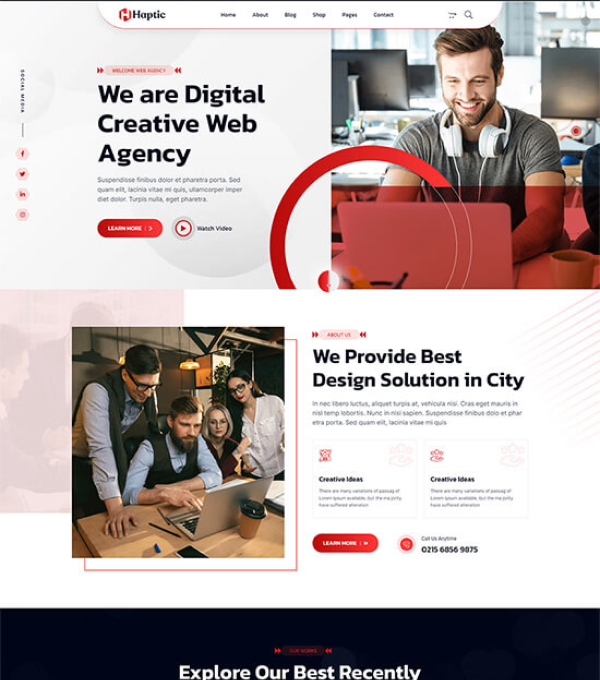

HANGZHOU, CHINA – JANUARY 25, 2025 – The logo of Chinese artificial intelligence company DeepSeek is seen in Hangzhou, Zhejiang province, China, January 26, 2025. (Photo credit should read CFOTO/Future Publishing via Getty Images)

America’s policy of restricting Chinese access to Nvidia’s most advanced AI chips has unintentionally helped a Chinese AI developer leapfrog U.S. rivals who have full access to the company’s latest chips.

This shows a fundamental reason that startups are often more effective than large companies: Scarcity spawns innovation.

A case in point is the Chinese AI model DeepSeek R1, a complex problem-solving model competing with OpenAI’s o1, which “zoomed to the global top 10 in performance” yet was built far more quickly, with fewer, less powerful AI chips, at a much lower cost, according to the Wall Street Journal.

The success of R1 should benefit enterprises. That’s because companies see no reason to pay more for an effective AI model when a cheaper one is available and is likely to improve more quickly.

“OpenAI’s model is the best in performance, but we also don’t want to pay for capacities we don’t need,” Anthony Poo, co-founder of a Silicon Valley-based startup using generative AI to predict financial returns, told the Journal.

Last September, Poo’s company shifted from Anthropic’s Claude to DeepSeek after tests showed DeepSeek “performed similarly for around one-fourth of the cost,” noted the Journal. For example, OpenAI charges $20 to $200 per month for its services, while DeepSeek makes its platform available at no charge to individual users and “charges only $0.14 per million tokens for developers,” reported Newsweek.
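To put the per-token price in perspective, here is a minimal sketch of the arithmetic. It assumes the quoted $0.14 per million tokens is a single flat rate (actual API pricing may vary by token type), and the workload figures are hypothetical. A monthly subscription and metered API access are different products, so the comparison is only a rough guide.

```python
# Price quoted by Newsweek in the article, in USD per million tokens.
# Assumption: one flat rate; real API pricing may differ by token type.
USD_PER_MILLION_TOKENS = 0.14


def api_cost_usd(tokens: int, usd_per_million: float = USD_PER_MILLION_TOKENS) -> float:
    """Return the cost in USD of processing `tokens` tokens at a flat rate."""
    return tokens / 1_000_000 * usd_per_million


def tokens_for_budget(budget_usd: float, usd_per_million: float = USD_PER_MILLION_TOKENS) -> float:
    """Return how many tokens a USD budget would cover at a flat rate."""
    return budget_usd / usd_per_million * 1_000_000


# Hypothetical workload: 50 million tokens in a month.
print(f"50M tokens: ${api_cost_usd(50_000_000):.2f}")

# Tokens covered by a $20 budget (the low end of the subscription range cited).
print(f"$20 covers about {tokens_for_budget(20) / 1_000_000:.0f} million tokens")
```

At this rate, even a workload of tens of millions of tokens per month would cost only a few dollars, which helps explain the appeal to cost-conscious developers.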

When my book, Brain Rush, was published last summer, I was concerned that the future of generative AI in the U.S. was too dependent on the largest technology companies. I contrasted this with the creativity of U.S. startups during the dot-com boom, which spawned 2,888 initial public offerings (compared to zero IPOs for U.S. generative AI startups).

DeepSeek’s success could encourage new rivals to U.S.-based large language model developers. If these startups build powerful AI models with fewer chips and get improvements to market faster, Nvidia revenue could grow more slowly as LLM developers replicate DeepSeek’s strategy of using fewer, less advanced AI chips.

“We’ll decline comment,” wrote an Nvidia spokesperson in a January 26 email.

DeepSeek’s R1: Excellent Performance, Lower Cost, Shorter Development Time

DeepSeek has impressed a leading U.S. venture capitalist. “Deepseek R1 is one of the most amazing and impressive breakthroughs I’ve ever seen,” Silicon Valley venture capitalist Marc Andreessen wrote in a January 24 post on X.

To be fair, DeepSeek’s technology lags that of U.S. rivals such as OpenAI and Google. However, the company’s R1 model, which launched January 20, “is a close rival despite using fewer and less-advanced chips, and in some cases skipping steps that U.S. developers considered essential,” noted the Journal.

Due to the high cost to deploy generative AI, enterprises are increasingly wondering whether it is possible to earn a positive return on investment. As I wrote last April, more than $1 trillion could be invested in the technology and a killer app for the AI chatbots has yet to emerge.

Therefore, businesses are excited about the prospects of lowering the investment required. Since R1’s open source model works so well and is so much less expensive than ones from OpenAI and Google, enterprises are keenly interested.

How so? R1 is the top-trending model being downloaded on HuggingFace (109,000 times, according to VentureBeat) and matches “OpenAI’s o1 at just 3%-5% of the cost.” R1 also offers a search feature users judge to be superior to OpenAI and Perplexity “and is only rivaled by Google’s Gemini Deep Research,” noted VentureBeat.

DeepSeek developed R1 more quickly and at a much lower cost. DeepSeek said it trained one of its latest models for $5.6 million in about two months, noted CNBC, far less than the $100 million to $1 billion range Anthropic CEO Dario Amodei cited in 2024 as the cost to train its models, the Journal reported.
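The size of that gap is easier to see as a ratio. The sketch below uses only the figures cited above and assumes they are comparable, although they come from different companies and may not cover the same cost components.

```python
# Figures cited in the article, in USD.
DEEPSEEK_TRAINING_COST = 5.6e6   # reported by DeepSeek for one of its latest models
REFERENCE_RANGE_LOW = 100e6      # low end of the range Dario Amodei cited in 2024
REFERENCE_RANGE_HIGH = 1e9       # high end of that range


def cost_share(cost: float, reference: float) -> float:
    """Return `cost` as a fraction of `reference`."""
    return cost / reference


def cost_multiple(cost: float, reference: float) -> float:
    """Return how many times larger `reference` is than `cost`."""
    return reference / cost


for label, reference in (("low end", REFERENCE_RANGE_LOW), ("high end", REFERENCE_RANGE_HIGH)):
    share = cost_share(DEEPSEEK_TRAINING_COST, reference)
    multiple = cost_multiple(DEEPSEEK_TRAINING_COST, reference)
    print(f"vs {label}: {share:.2%} of the cost, about {multiple:.0f}x cheaper")
```

On these numbers, the reported DeepSeek figure is roughly 0.56% to 5.6% of the cited range, or about 18 to 179 times cheaper.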

To train its V3 model, DeepSeek used a cluster of more than 2,000 Nvidia chips “compared with tens of thousands of chips for training models of similar size,” noted the Journal.

Independent analysts from Chatbot Arena, a platform hosted by UC Berkeley researchers, rated V3 and R1 models in the top 10 for chatbot performance on January 25, the Journal wrote.

The CEO behind DeepSeek is Liang Wenfeng, who manages an $8 billion hedge fund. His hedge fund, named High-Flyer, used AI chips to build algorithms to identify “patterns that could affect stock prices,” noted the Financial Times.

Liang’s outsider status helped him succeed. In 2023, he launched DeepSeek to develop human-level AI. “Liang built an exceptional infrastructure team that really understands how the chips worked,” one founder at a rival LLM company told the Financial Times. “He took his best people with him from the hedge fund to DeepSeek.”

DeepSeek benefited when Washington banned Nvidia from exporting H100s, Nvidia’s most powerful chips, to China. That forced local AI companies to engineer around the limited computing power of the less powerful chips available to them, Nvidia H800s, according to CNBC.

The H800 chips transfer data between chips at half the H100’s 600-gigabits-per-second rate and are generally less expensive, according to a Medium post by Nscale chief commercial officer Karl Havard. Liang’s team “already knew how to solve this problem,” noted the Financial Times.

To be fair, DeepSeek said it had stockpiled 10,000 H100 chips prior to October 2022, when the U.S. imposed export controls on them, Liang told Newsweek. It is unclear whether DeepSeek used these H100 chips to develop its models.

Microsoft is very impressed with DeepSeek’s accomplishments. “To see the DeepSeek’s new model, it’s super impressive in terms of both how they have really effectively done an open-source model that does this inference-time compute, and is super-compute efficient,” CEO Satya Nadella said January 22 at the World Economic Forum, according to a CNBC report. “We should take the developments out of China very, very seriously.”

Will DeepSeek’s Breakthrough Slow The Growth In Demand For Nvidia Chips?

DeepSeek’s success should spur changes to U.S. AI policy while making Nvidia investors more cautious.

U.S. export restrictions on Nvidia put pressure on startups like DeepSeek to prioritize efficiency, resource-pooling, and collaboration. To create R1, DeepSeek re-engineered its training process to work with the Nvidia H800s’ lower processing speed, former DeepSeek employee and current Northwestern University computer science Ph.D. student Zihan Wang told MIT Technology Review.

One Nvidia researcher was enthusiastic about DeepSeek’s accomplishments. DeepSeek’s paper reporting the results brought back memories of pioneering AI programs that mastered board games such as chess, which were built “from scratch, without imitating human grandmasters first,” senior Nvidia research scientist Jim Fan said on X, as featured by the Journal.

Will DeepSeek’s success throttle Nvidia’s growth rate? I do not know. However, based on my research, businesses clearly want powerful generative AI models that return their investment. Enterprises will be able to do more experiments aimed at discovering high-payoff generative AI applications if the cost and time to build those applications is lower.

That’s why R1’s lower cost and shorter time to perform well should continue to attract more commercial interest. A key to delivering what businesses want is DeepSeek’s skill at optimizing less powerful GPUs.

If more startups can replicate what DeepSeek has accomplished, there could be less demand for Nvidia’s most expensive chips.

I do not know how Nvidia will respond should this occur. However, in the short run that could mean less revenue growth as startups, following DeepSeek’s strategy, build models with fewer, lower-priced chips.