Scientists flock to DeepSeek: how they’re using the blockbuster AI model

Scientists are flocking to DeepSeek-R1, a cheap and powerful artificial intelligence (AI) ‘reasoning’ model that sent the US stock market spiralling after it was released by a Chinese firm recently.

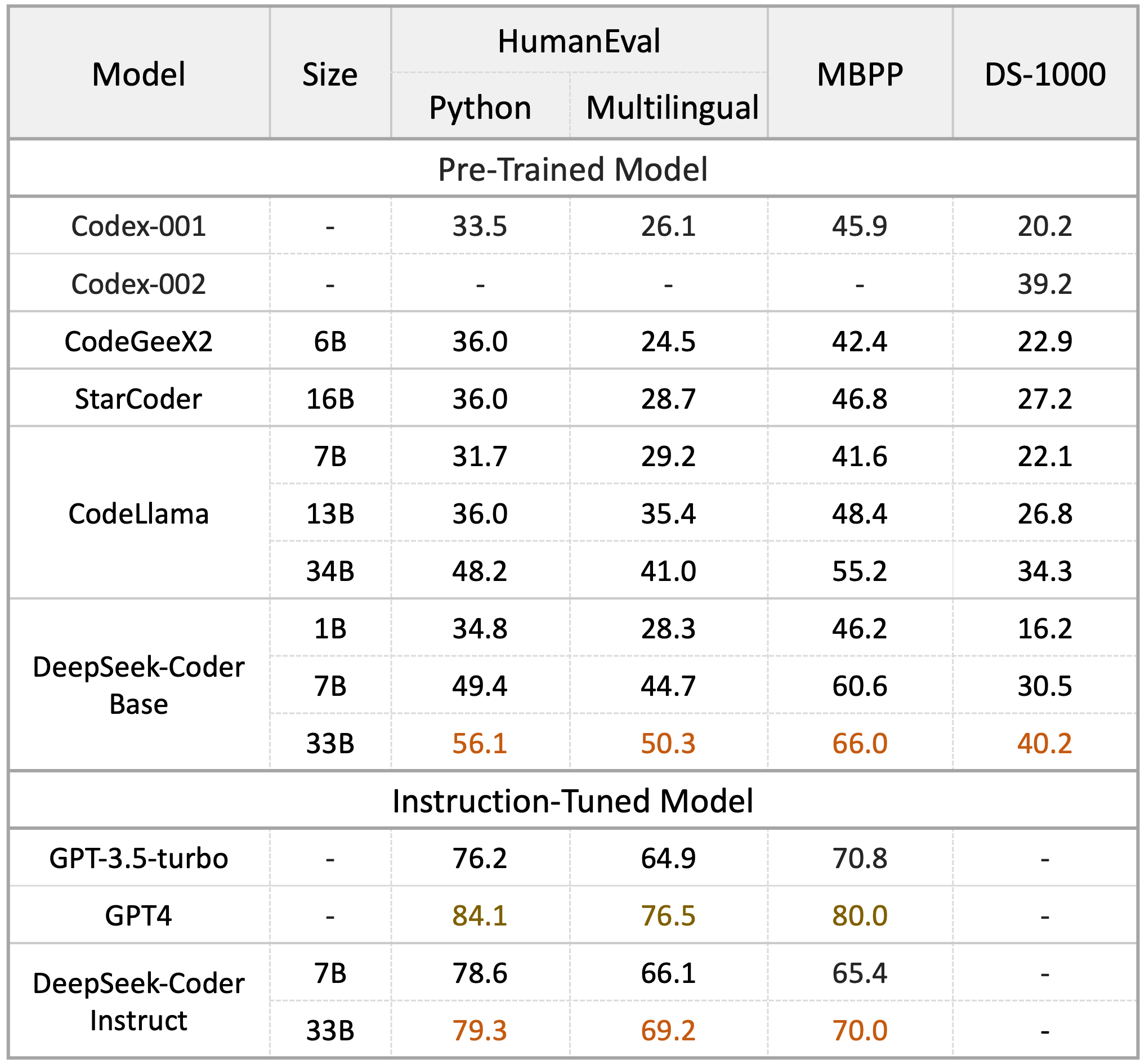

Repeated tests suggest that DeepSeek-R1’s ability to solve mathematics and science problems matches that of the o1 model, released in September by OpenAI in San Francisco, California, whose reasoning models are considered industry leaders.

Although R1 still fails on many tasks that researchers might want it to perform, it is giving scientists worldwide the opportunity to train custom reasoning models designed to solve problems in their disciplines.

“Based on its great performance and low cost, we believe Deepseek-R1 will encourage more scientists to try LLMs in their daily research, without worrying about the cost,” says Huan Sun, an AI researcher at Ohio State University in Columbus. “Almost every colleague and collaborator working in AI is talking about it.”

Open season

For researchers, R1’s cheapness and openness could be game-changers: using its application programming interface (API), they can query the model at a fraction of the cost of proprietary rivals, or for free by using its online chatbot, DeepThink. They can also download the model to their own servers and run and build on it for free – which isn’t possible with competing closed models such as o1.
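As a rough illustration of what querying a model through an API involves, the sketch below assembles (but does not send) a chat-style HTTP request using only the Python standard library. The endpoint URL, the model identifier and the `build_request` helper are assumptions made for this example and are not taken from the article; the provider's current API documentation is the authority on the real values.

```python
import json

# Assumed values for illustration only; check the provider's documentation.
API_URL = "https://api.deepseek.com/chat/completions"  # assumed endpoint
MODEL_NAME = "deepseek-reasoner"                        # assumed model identifier


def build_request(question: str, api_key: str) -> dict:
    """Return the URL, headers and JSON body for a single chat-style query.

    Hypothetical helper: it only prepares the request and performs no
    network access, so it can be inspected safely before anything is sent.
    """
    body = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": question}],
    }
    return {
        "url": API_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "data": json.dumps(body),
    }


request = build_request(
    "Sketch a proof that a bounded linear operator is continuous.",
    "YOUR_API_KEY",  # placeholder, not a real key
)
print(request["url"])
print(request["data"])
```

The prepared dictionary could then be handed to any HTTP client; keeping construction separate from sending makes it easier to log or estimate the cost of a batch of queries before running it.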

Since R1’s launch on 20 January, “lots of researchers” have been investigating training their own reasoning models, based on and inspired by R1, says Cong Lu, an AI researcher at the University of British Columbia in Vancouver, Canada. That’s backed up by data from Hugging Face, an open-science repository for AI that hosts the DeepSeek-R1 code. In the week since its launch, the site had logged more than three million downloads of different versions of R1, including those already built on by independent users.

Scientific tasks

In preliminary tests of R1’s abilities on data-driven scientific tasks – taken from real papers in topics including bioinformatics, computational chemistry and cognitive neuroscience – the model matched o1’s performance, says Sun. Her team challenged both AI models to complete 20 tasks from a suite of problems they have created, called the ScienceAgentBench. These include tasks such as analysing and visualizing data. Both models solved only around one-third of the challenges correctly. Running R1 using the API cost 13 times less than did o1, but it had a slower “thinking” time than o1, notes Sun.
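To make the figures above concrete, here is a minimal arithmetic sketch. Only the task count (20) and the cost ratio (13) come from the article; the number of solved tasks and the o1 bill are hypothetical placeholders chosen for illustration, because the article gives only "around one-third" and no absolute costs.

```python
def solved_fraction(solved: int, total: int) -> float:
    """Share of benchmark tasks answered correctly."""
    return solved / total


def cheaper_cost(reference_cost: float, ratio: float) -> float:
    """Cost of a run that is `ratio` times cheaper than the reference run."""
    return reference_cost / ratio


TOTAL_TASKS = 20               # from the article
COST_RATIO = 13                # from the article: R1 cost 13 times less than o1
HYPOTHETICAL_SOLVED = 7        # placeholder for "around one-third" of 20 tasks
HYPOTHETICAL_O1_COST = 26.0    # placeholder bill in arbitrary currency units

fraction = solved_fraction(HYPOTHETICAL_SOLVED, TOTAL_TASKS)
r1_cost = cheaper_cost(HYPOTHETICAL_O1_COST, COST_RATIO)

print(f"Solved: {fraction:.0%} of {TOTAL_TASKS} tasks")
print(f"o1 run: {HYPOTHETICAL_O1_COST:.2f}, R1 run: {r1_cost:.2f}")
```

With equal accuracy on the benchmark, the cost per correctly solved task differs by the same factor of 13, which is the comparison that matters for a lab running many such tasks.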

R1 is also showing promise in mathematics. Frieder Simon, a mathematician and computer scientist at the University of Oxford, UK, challenged both models to create a proof in the abstract field of functional analysis and found R1’s argument more promising than o1’s. But given that such models make mistakes, to benefit from them researchers need to be already armed with skills such as telling a good and bad proof apart, he says.

Much of the excitement over R1 is because it has been released as ‘open-weight’, meaning that the learnt connections between different parts of its algorithm are available to build on. Scientists who download R1, or one of the much smaller ‘distilled’ versions also released by DeepSeek, can improve its performance in their field through additional training, known as fine-tuning. Given a suitable data set, researchers could train the model to improve at coding tasks specific to the scientific process, says Sun.